The Non-Determinism Crisis: Why Your AI Agents Are Schrödinger's Software

Fri, Feb 6, 2026

Your AI agent passes every test. It handles edge cases beautifully. The demo goes perfectly. You deploy to production. Customers start receiving refunds for products they never purchased. Welcome to the non-determinism crisis—where your software works exactly as designed, except when it doesn't, and there's nothing you can do about it.

The problem isn't that AI agents are unpredictable. The problem is that we've spent forty years building software under the assumption that identical inputs produce identical outputs. Now we're deploying systems where that fundamental guarantee doesn't exist, and we're pretending it's just another engineering challenge.

The Determinism Delusion

Traditional software is deterministic. If x = 2 and y = 3, then x + y always equals 5. Always. This isn't just a nice property—it's the foundation of everything we know about debugging, testing, and maintaining software. When a bug occurs, we can reproduce it. When tests pass, we know the system will behave the same way in production.

AI agents broke this covenant. The same prompt, given to the same model, with the same parameters, can produce different outputs. Not because of cosmic rays or memory corruption, but because language models generate text by sampling from a probability distribution, and that sampling is a feature, not a bug. The randomness isn't an implementation detail to be patched out. It's part of what makes the output useful at all.
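To make that concrete, here is a minimal sketch of temperature sampling over a toy set of next actions. The action names and scores in `LOGITS` are invented for illustration and do not come from any real model; the point is only that a low-scoring action still gets picked some fraction of the time once the temperature is above zero.

```python
import math
import random

# Hypothetical scores for the agent's next action on a neutral support inquiry.
LOGITS = {"answer_question": 4.0, "escalate": 1.5, "issue_refund": 0.5}


def sample_token(logits, temperature, rng):
    """Pick one key from logits using softmax sampling at the given temperature."""
    if temperature == 0:
        # Greedy decoding: always return the highest-scoring option.
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


def action_counts(temperature, runs=10_000, seed=None):
    """Count how often each action is chosen across repeated identical calls."""
    rng = random.Random(seed)
    counts = dict.fromkeys(LOGITS, 0)
    for _ in range(runs):
        counts[sample_token(LOGITS, temperature, rng)] += 1
    return counts


if __name__ == "__main__":
    print("temperature 0:", action_counts(0))
    print("temperature 1:", action_counts(1.0))
```

At temperature 0 this toy always answers the question. At temperature 1 the same input yields `issue_refund` in a few percent of runs, with no change to the "model" at all.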

But here's where it gets philosophically interesting: we've built our entire software engineering discipline on the assumption that determinism equals reliability. We test exhaustively because we believe that covering all edge cases guarantees correct behavior. We version control because we assume code changes produce predictable results. We monitor because we expect systems to behave consistently over time.

AI agents laugh at these assumptions.

The Production Reality Check

Three months ago, we deployed a customer service agent that had been tested across 10,000 conversations. Success rate: 97.3%. Customer satisfaction scores through the roof. The 2.7% failure cases were mostly edge cases—customers speaking in dialects, unusual product combinations, that kind of thing.

Within 48 hours of production deployment, we saw our first "phantom refund." A customer contacted us about a refund they received for a product they loved and wanted to keep. The agent had initiated a full refund, plus expedited replacement, plus store credit, because it "detected customer dissatisfaction" from a perfectly neutral support inquiry.

The kicker? We couldn't reproduce the behavior. Same customer, same conversation history, same product details—different result. The agent worked perfectly in our test environment, continued working perfectly for 99.7% of customers, but occasionally decided to give away the company store.

This isn't a quality assurance problem. It's a fundamental property of the system. When you deploy non-deterministic software, you accept that some percentage of executions will produce results that no amount of testing could have predicted.

The Philosophical Rabbit Hole

The non-determinism crisis forces us to confront questions that software engineers haven't had to ask since the dawn of computing. What does it mean for software to be "correct" when identical inputs can produce different outputs? How do you debug a system where the bug might never occur again? What does "production-ready" mean when perfect testing guarantees nothing?

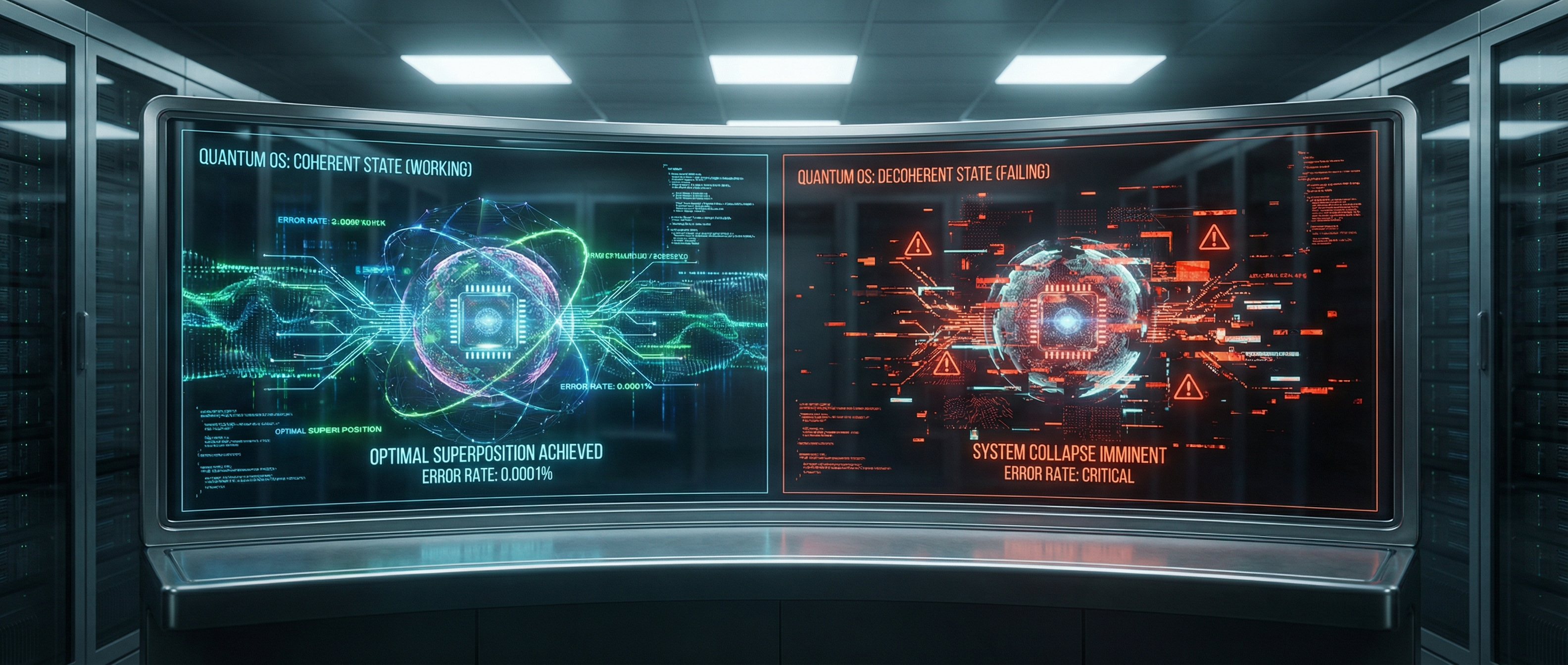

We're dealing with Schrödinger's Software—systems that exist in superposition between working perfectly and failing catastrophically until observed in production. The act of deployment collapses the wave function, but you don't know which outcome you got until customers start complaining.

This isn't just engineering pedantry. It represents a fundamental shift in what software is. Traditional software is a set of instructions that transforms inputs into outputs predictably. AI agents are more like artificial employees: they have good days and bad days, they learn and adapt, they make decisions based on incomplete information, and sometimes they just have brain farts.

The Testing Paradox

Here's where the crisis becomes existential: our entire testing methodology assumes reproducibility. When a test fails, we investigate the failure, fix the code, and run the test again to verify the fix. When a test passes, we assume the functionality works and move on.

But non-deterministic systems break this cycle. A test that passes today might fail tomorrow for reasons that have nothing to do with code changes. A test that fails might pass when you run it again. The correlation between test results and production behavior becomes statistical rather than absolute.

We've responded by throwing statistics at the problem. Run each test 100 times. Measure the distribution of outcomes. Set thresholds for acceptable variance. But this misses the point: we're trying to apply deterministic quality standards to non-deterministic systems.
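For reference, the "run it 100 times" approach looks something like the sketch below. `make_flaky_agent_test` is a hypothetical stand-in for a real agent test (it simply passes with a fixed probability), and the 95% threshold is an arbitrary assumption, not a recommendation.

```python
import random


def pass_rate(test_fn, runs=100):
    """Run a possibly flaky test repeatedly and return the fraction of passes."""
    passes = sum(1 for _ in range(runs) if test_fn())
    return passes / runs


def meets_threshold(test_fn, runs=100, min_pass_rate=0.95):
    """Treat the test as 'green' when the observed pass rate clears the threshold."""
    return pass_rate(test_fn, runs) >= min_pass_rate


def make_flaky_agent_test(success_probability, seed=None):
    """Hypothetical stand-in for an agent test that passes with a fixed probability."""
    rng = random.Random(seed)
    return lambda: rng.random() < success_probability


if __name__ == "__main__":
    flaky = make_flaky_agent_test(success_probability=0.997)
    print("observed pass rate:", pass_rate(flaky, runs=100))
    print("ship it?", meets_threshold(flaky, runs=100))
```

Notice what this can and cannot tell you. If the true failure rate is 0.3%, a 100-run sample shows zero failures about 74% of the time (0.997 to the power of 100), so a clean run says very little about the rare, expensive failure you actually care about.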

The uncomfortable truth is that we've built our entire software engineering discipline on foundations that AI agents render invalid. It's like trying to apply bridge-engineering principles to weather prediction. The tools, techniques, and mental models simply don't transfer.

The Monitoring Mirage

Traditional monitoring assumes that when metrics deviate from normal ranges, something is broken and needs fixing. But what constitutes "normal" for a non-deterministic system? When your AI agent processes 1,000 customer complaints and handles 997 of them perfectly, is the 0.3% failure rate a problem that needs solving, or just the cost of doing business with probabilistic software?
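It's worth asking how much that 0.3% actually tells you. One common way to put error bars on an observed rate is the Wilson score interval, sketched below under the assumption that each conversation is an independent trial (real traffic may not be). The function name and the 95% default (`z=1.96`) are choices made for this illustration.

```python
import math


def wilson_interval(failures, total, z=1.96):
    """Return a (low, high) Wilson score interval for the true failure rate.

    z=1.96 corresponds to roughly 95% confidence.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = failures / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


if __name__ == "__main__":
    low, high = wilson_interval(failures=3, total=1000)
    print(f"observed 0.30%, plausible range {low:.2%} to {high:.2%}")
```

For 3 failures in 1,000 conversations the interval runs from roughly 0.1% to 0.9%. So "normal" for this agent is not a number but a band, and a monitoring threshold set at the observed rate will fire on noise alone.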

We've spent decades building sophisticated monitoring systems that alert us when systems behave abnormally. But "abnormal" for an AI agent might just be "Tuesday." The system that worked perfectly yesterday might produce completely different results today, even though nothing has changed except the phase of the moon or the stock price of some unrelated company.

The monitoring challenge goes deeper. When you detect that an AI agent is behaving suboptimally, what do you do? You can't just "fix the bug" because there might not be a bug to fix. The system might be working exactly as designed, producing perfectly valid results that happen to be different from what you expected.

The Organizational Earthquake

The non-determinism crisis isn't just technical—it's organizational. We've built companies around the assumption that software behaves predictably. Product managers write requirements assuming that features work consistently. Customer support teams troubleshoot assuming that problems can be reproduced. Legal departments assess risk assuming that software behavior can be controlled.

AI agents break all of these assumptions.

When an AI customer support agent gives away $50,000 in unwarranted refunds, who takes responsibility? The engineer who deployed the system? The product manager who specified the requirements? The executive who approved the project? The AI itself? We've created systems that can make expensive decisions autonomously, but we haven't evolved our accountability models to match.

The legal implications are particularly thorny. Traditional software liability assumes that bugs represent defects that could have been prevented with better engineering. But how do you assign liability for a system that works correctly 99.7% of the time and fails spectacularly the remaining 0.3%? Is that a defect, or just the statistical reality of working with probabilistic systems?

The Path Forward (There Isn't One)

Here's where I'm supposed to offer solutions. Techniques for managing non-deterministic systems. Best practices for testing AI agents. Monitoring strategies for probabilistic software. But that would be missing the point: we're not dealing with a problem that has a solution. We're dealing with a fundamental paradigm shift that renders our existing solutions obsolete.

The non-determinism crisis isn't a technical challenge to be solved—it's a new reality to be accepted. AI agents will never be deterministic. They will never be fully testable. They will never behave consistently. And yet, they might still be worth deploying because the benefits outweigh the costs.

This represents a psychological shift more than a technical one. We need to stop treating AI agents like traditional software and start treating them like artificial employees. You don't "test" an employee in the way you test software—you evaluate their performance statistically, you provide training and feedback, you set up guardrails and oversight, and you accept that sometimes they'll make mistakes.

The question isn't "how do we make AI agents deterministic?" The question is "how do we build organizations that can thrive with non-deterministic software?"

The New Engineering

If we're going to survive the non-determinism crisis, we need to evolve new engineering practices that accept rather than fight the probabilistic nature of AI agents:

Statistical Quality Assurance: Instead of asking "does this feature work?" we need to ask "what percentage of the time does this feature produce acceptable results?" Acceptable error rates become a design parameter, not a failure mode.

Continuous Adaptation: Rather than deploying static systems, we need to build organizations that can continuously monitor, evaluate, and adjust AI behavior. The system you deploy today won't be the system you're running next month, and that's okay.

Probabilistic Risk Management: Traditional risk assessment assumes that failures can be prevented. With AI agents, we need to assume that failures will occur and focus on minimizing their impact rather than preventing them entirely.

Human-AI Collaboration: The most successful AI deployments don't replace human judgment—they augment it. Humans handle edge cases, provide oversight for critical decisions, and step in when agents behave unpredictably.
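As one illustration of the last two practices, here is a minimal sketch of a guardrail that sits between the agent and the refund system. Everything in it is hypothetical: the `RefundRequest` fields, the `route_refund` function, and the $50 auto-approval limit are assumptions made for the example, not a description of any real deployment. The idea is that the agent can propose whatever it likes, while a small piece of ordinary deterministic code bounds what it can do without a human.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class RefundRequest:
    """A refund the agent wants to issue (hypothetical shape)."""
    customer_requested: bool  # did the customer actually ask for a refund?
    amount: float             # total value of the proposed refund, in dollars


def route_refund(request, auto_limit=50.0):
    """Decide whether an agent-proposed refund may proceed without a human."""
    if not request.customer_requested:
        # The agent inferred dissatisfaction on its own: never act on that alone.
        return Decision.HUMAN_REVIEW
    if request.amount > auto_limit:
        # Cap the cost of any single unsupervised mistake.
        return Decision.HUMAN_REVIEW
    return Decision.AUTO_APPROVE


if __name__ == "__main__":
    phantom = RefundRequest(customer_requested=False, amount=120.0)
    print(route_refund(phantom))  # Decision.HUMAN_REVIEW
```

This doesn't make the agent deterministic, and it doesn't prevent the agent from being wrong. It only limits how much a wrong decision can cost before a person sees it, which is the shift from prevention to impact that the list above describes.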

The Bottom Line

The non-determinism crisis isn't going away. As AI agents become more capable and more autonomous, we're going to deploy systems that work perfectly except when they don't, and we'll have no way to predict or prevent the failures.

This isn't a failure of engineering—it's a fundamental property of intelligence. Human employees are non-deterministic too. They have good days and bad days, they make decisions that seem reasonable at the time but prove catastrophic in retrospect, they occasionally give away the company store through a combination of good intentions and poor judgment.

We've learned to build organizations that function despite human non-determinism. Now we need to learn to build organizations that function despite artificial non-determinism.

The future belongs to companies that can thrive with Schrödinger's Software—systems that exist in superposition between brilliant success and catastrophic failure until observed in production. The non-determinism crisis isn't a problem to be solved. It's the price of admission to the age of intelligent software.

Welcome to the new reality. Same as the old reality, but with better marketing.

Are you dealing with non-deterministic AI agents in production? How do you handle the uncertainty? Or are you still pretending that better testing will solve the problem? Let's discuss the philosophical implications in the comments.